- re-sampling

- synthetic samples: generate more samples for minor classes

- re-weighting

- few-shot learning

- decoupling representation and classifier learning: use normal sampling in the feature learning stage and use re-sampling in the classifier learning stage.
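As a rough illustration of the re-sampling, re-weighting, and decoupling ideas above, the sketch below computes per-class sampling probabilities of the form p_j = n_j^q / sum_i n_i^q (q = 1 gives normal, instance-balanced sampling; q = 0 gives class-balanced re-sampling) and inverse-frequency loss weights. The function names, the exponent parameter `q`, and the toy class counts are assumptions made for illustration.

```python
def sampling_probs(class_counts, q):
    """Probability of drawing each class: p_j = n_j**q / sum_i n_i**q."""
    powered = [n ** q for n in class_counts]
    total = sum(powered)
    return [p / total for p in powered]


def inverse_frequency_weights(class_counts):
    """Loss weights proportional to 1 / n_j, rescaled so they average to 1."""
    inverse = [1.0 / n for n in class_counts]
    scale = len(class_counts) / sum(inverse)
    return [w * scale for w in inverse]


# Toy long-tailed dataset (hypothetical counts): one head class, two tail classes.
counts = [900, 90, 10]
instance_balanced = sampling_probs(counts, q=1.0)  # normal sampling, feature learning stage
class_balanced = sampling_probs(counts, q=0.0)     # re-sampling, classifier learning stage
loss_weights = inverse_frequency_weights(counts)   # re-weighting alternative
```

With q = 1 the head class is drawn 90% of the time; with q = 0 every class is drawn equally often, which is the behaviour wanted when re-training only the classifier.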

Causal Inference

Big Names: Judea Pearl [Tutorial] [slides] [textbook], James Robins [Textbook] [slides]

Tutorial:

- Causality for machine learning [4]

- Towards Causal Representation Learning [8]

- A briefing on causal inference written by myself

Workshop: NIPS2018 workshop on causal learning, KDD2020 Tutorial on Causal Inference Meets Machine Learning

Material: MILA Course

Causality and disentanglement: [5] [6]

Counterfactual and disentanglement: [7]

Reference

[1] Chalupka K, Perona P, Eberhardt F. Visual causal feature learning. arXiv preprint arXiv:1412.2309, 2014.

[2] Lopez-Paz D, Nishihara R, Chintala S, et al. Discovering causal signals in images. CVPR, 2017.

[3] Bau D, Zhu J Y, Strobelt H, et al. GAN Dissection: Visualizing and Understanding Generative Adversarial Networks. arXiv preprint arXiv:1811.10597, 2018.

[4] Schölkopf, Bernhard. “Causality for machine learning.” arXiv preprint arXiv:1911.10500 (2019).

[5] Kim, Hyemi, et al. “Counterfactual Fairness with Disentangled Causal Effect Variational Autoencoder.” arXiv preprint arXiv:2011.11878 (2020).

[6] Shen, Xinwei, et al. “Disentangled Generative Causal Representation Learning.” arXiv preprint arXiv:2010.02637 (2020).

[7] Yue, Zhongqi, et al. “Counterfactual Zero-Shot and Open-Set Visual Recognition.” arXiv preprint arXiv:2103.00887 (2021).

[8] Schölkopf, Bernhard, et al. “Towards causal representation learning.” arXiv preprint arXiv:2102.11107 (2021).

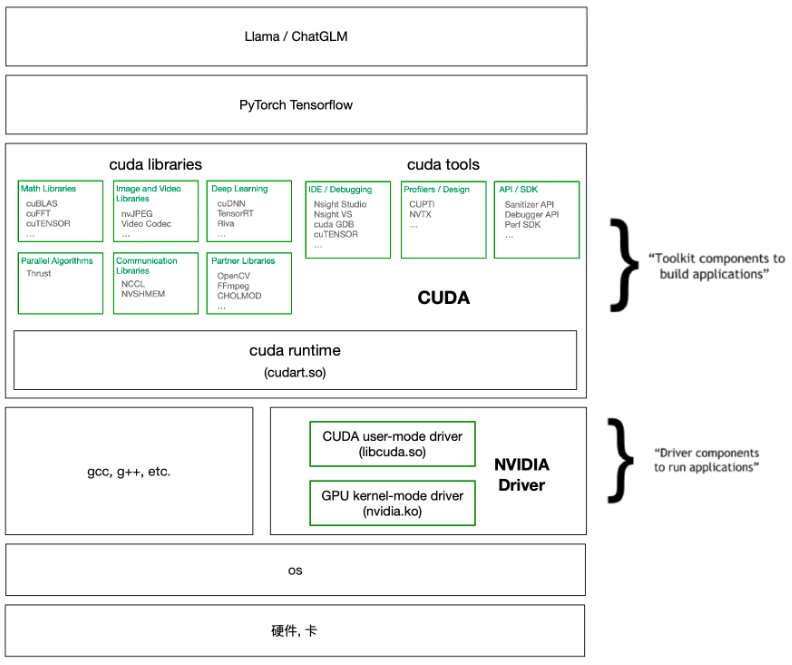

GPU Cuda and CuDNN

GPU

Look up GPU information:

lspci

or

lshw -C display

NVIDIA system management interface, monitor GPU usage:

nvidia-smi

(reports the GPU driver version and the CUDA user-mode version)

GPU Driver

Check the latest driver information at http://www.nvidia.com/Download/index.aspx. Then look up the driver information on the local machine:

cat /proc/driver/nvidia/version

Check the compatibility between the CUDA runtime version and the driver version: https://docs.nvidia.com/deploy/cuda-compatibility/

Install NVIDIA GPU driver using GUI: Software & Updates -> Additional Drivers

Install NVIDIA GPU driver using apt-get

sudo add-apt-repository ppa:ubuntu-x-swat/x-updates

sudo apt-get update

sudo apt-get install nvidia-current nvidia-current-modaliases nvidia-settings

Install NVIDIA GPU driver using *.run file downloaded from http://www.nvidia.com/Download/index.aspx

- Hit CTRL+ALT+F1 and log in using your credentials.

- Stop your current X server session by typing

sudo service lightdm stop

- Enter runlevel 3 by typing

sudo init 3

and install your *.run file.

- You might be required to reboot when the installation finishes. If not, run

sudo service lightdm start

or

sudo start lightdm

to start your X server again.

CUDA

When using Anaconda to install a deep learning platform, it is sometimes unnecessary to install CUDA yourself.

Preprocessing

- Uninstall the GPU driver first:

sudo /usr/bin/nvidia-uninstall

or

sudo apt-get remove --purge nvidia*

and

sudo apt-get autoremove; sudo reboot

- Blacklist nouveau: add “blacklist nouveau” and “options nouveau modeset=0” at the end of /etc/modprobe.d/blacklist.conf, then run

sudo update-initramfs -u; sudo reboot

- Stop your current X server session:

sudo service lightdm stop


Install Cuda

Download the *.run file from NVIDIA website

- The latest version: https://developer.nvidia.com/cuda-downloads

- All versions: https://developer.nvidia.com/cuda-toolkit-archive

sudo sh cuda_10.0.130_410.48_linux.run

Then add CUDA to PATH and LD_LIBRARY_PATH:

echo 'export PATH=/usr/local/cuda/bin:$PATH' >> ~/.bashrc

echo 'export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH' >> ~/.bashrc

source ~/.bashrc

Check the CUDA version after installation:

nvcc -V

Then compile and run the CUDA samples.
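If the version check needs to be scripted, one option is to parse the output of nvcc -V. The sketch below assumes the output contains a line of the form "Cuda compilation tools, release 10.0, V10.0.130"; the helper names are hypothetical, and the subprocess call only returns a value on a machine where nvcc is on PATH.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(nvcc_output):
    """Extract the 'release X.Y' version from `nvcc -V` output, or None if absent."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release():
    """Return the CUDA release reported by nvcc, or None if nvcc is not on PATH."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "-V"], capture_output=True, text=True)
    return parse_nvcc_release(result.stdout)


# Sample output line (assumed format) from a CUDA 10.0 toolkit.
sample = "Cuda compilation tools, release 10.0, V10.0.130"
release = parse_nvcc_release(sample)
```

The parsed release can then be compared by hand against the driver version using the compatibility table linked in the GPU Driver section.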

CuDNN

cuDNN is a library of GPU-accelerated primitives for deep neural networks that runs on top of CUDA. Download the compressed package from https://developer.nvidia.com/rdp/form/cudnn-download-survey.

cd $CUDNN_PATH

Illumination Model

Dichromatic Reflection Model [1] [2]: the observed color at each pixel is modeled as the sum of a body reflection term and a surface reflection term, each scaled by the global illumination and the sensor sensitivity. The chromatic terms for body and surface reflection depend only on the object material.
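For reference, the dichromatic model of [1] is commonly stated in the form below, where the subscripts s and b denote surface and body reflection, the m terms are geometric scale factors that depend on the incidence, exit, and phase angles (i, e, g), and the c terms are the spectral (chromatic) components. This notation is an assumption taken from the usual statement of the model; it is not necessarily the per-pixel notation used in [2], and here i is an angle, not a pixel index.

```latex
L(\lambda, i, e, g) = m_s(i, e, g)\, c_s(\lambda) + m_b(i, e, g)\, c_b(\lambda)
```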

Gray pixels: pixels with equal RGB values. Detecting gray pixels in a color-biased image is not easy [3].

Reference

[1] Shafer, Steven A. “Using color to separate reflection components.” Color Research & Application 10.4 (1985): 210-218.

[2] Song, Shuangbing, et al. “Illumination Harmonization with Gray Mean Scale.” Computer Graphics International Conference. Springer, Cham, 2020.

[3] Qian, Yanlin, et al. “On finding gray pixels.” CVPR, 2019.

[4] Bhattad, Anand, and David A. Forsyth. “Cut-and-Paste Neural Rendering.” arXiv preprint arXiv:2010.05907 (2020).

[5] Yu, Ye, and William AP Smith. “InverseRenderNet: Learning single image inverse rendering.” CVPR, 2019.

Camera Survey

Interface Type:

GigE and USB interfaces are commonly used. The advantage of GigE is long-distance transmission.

Color vs. Monochrome

When the exposure begins, each photosite is uncovered to collect incoming light. When the exposure ends, the occupancy of each photosite is read as an electrical signal, which is then quantized and stored as a numerical value in an image file.

Unlike color sensors, monochrome sensors capture all incoming light at each pixel regardless of color.

Monochrome sensors also do not require demosaicing to create the final image, because the values recorded at each photosite directly become the values at each pixel. As a result, monochrome sensors are able to achieve a slightly higher resolution.

Sensor Type:

- CCD (Charge-Coupled Device): a special manufacturing process allows the conversion to take place in the chip without distortion, which makes CCDs more expensive. CCDs capture high-quality, low-noise images and are sensitive to light.

- CMOS (Complementary Metal-Oxide-Semiconductor): uses transistors at each pixel to move the charge through traditional wires. CMOS sensors are made with the same standard manufacturing processes as microchips, so they are cheaper and have low power consumption.

Readout Method:

Global vs. rolling shutter: originally, CCD sensors used a global shutter while CMOS sensors used a rolling shutter. A rolling shutter is always active, rolling through the pixels line by line from top to bottom. In contrast, with a global shutter every pixel stores its electrical charge and is read out when the shutter closes, after which the pixel is reset for the next exposure; this allows the entire sensor area to be output simultaneously. Nowadays, CMOS sensors can also have global shutter capabilities.

Advantage of global shutter: because the whole scene is exposed at one moment in time, a global shutter handles motion and pulsed light well, and the light or motion can be synchronized with the open-shutter phase. However, a rolling shutter can also handle motion and pulsed light to an extent through a combination of fast shutter speeds and timing of the light source.

Quantum Efficiency

The ability of a pixel to convert an incident photon to charge is specified by its quantum efficiency. For example, if four photo-electrons are produced for every ten incident photons, the quantum efficiency is 40%. Typical values of quantum efficiency are in the range of 30 - 60%. The quantum efficiency depends on wavelength and is not necessarily uniform across light intensities.
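The definition above is a simple ratio. The following minimal sketch reproduces the worked example from the text; the function name is an assumption for illustration.

```python
def quantum_efficiency(incident_photons, photo_electrons):
    """Fraction of incident photons that are converted to photo-electrons."""
    if incident_photons <= 0:
        raise ValueError("incident_photons must be positive")
    return photo_electrons / incident_photons


# The example from the text: ten incident photons, four photo-electrons.
qe = quantum_efficiency(10, 4)
print(f"{qe:.0%}")  # prints 40%
```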

Field of View

FOV (Field of View) depends on the lens focal length and the sensor size. Generally, for the same lens, larger sensors yield a greater FOV.
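The effect of sensor size on FOV can be made concrete with the standard relation FOV = 2 * atan(d / (2 * f)) for a rectilinear lens focused at infinity, where d is the sensor dimension and f is the focal length. The function name and the example sensor and lens sizes below are assumptions for illustration.

```python
import math


def angular_fov_degrees(sensor_size_mm, focal_length_mm):
    """Angular field of view along one sensor dimension, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


# Two hypothetical sensors behind the same 18 mm lens.
wide = angular_fov_degrees(36.0, 18.0)    # larger sensor
narrow = angular_fov_degrees(18.0, 18.0)  # smaller sensor
```

With the lens fixed, halving the sensor width narrows the FOV, which is the sense in which larger sensors yield a greater FOV.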

Pixel Size

A small pixel size is desirable because it results in a smaller die size and/or higher spatial resolution; a large pixel size is desirable because it results in higher dynamic range and signal-to-noise ratio.

Zoom in

(1) Zoom in on a bounding box [1] [2]

(2) Zoom in on a salient region [3] [4]

- relation to (1): if the salient region is a rectangle and its salience value is infinite, this is equivalent to zooming in on a bounding box.

- relation to pooling: weighted pooling with salience map as weight map

- relation to deformable CNN: use salience map to calculate offset for each position
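The relation to pooling noted above can be sketched as a weighted average over positions, with the salience map as the weight map. This is only an illustration of that one relation, not the sampling layers of [3] or [4]; the function name and toy maps are assumptions.

```python
def saliency_weighted_pool(feature_map, saliency_map):
    """Pool a 2D feature map into one value, weighting each position by its salience."""
    weighted_sum = 0.0
    weight_total = 0.0
    for feature_row, saliency_row in zip(feature_map, saliency_map):
        for value, weight in zip(feature_row, saliency_row):
            weighted_sum += weight * value
            weight_total += weight
    if weight_total == 0:
        raise ValueError("saliency map must contain a positive weight")
    return weighted_sum / weight_total


features = [[1.0, 2.0],
            [3.0, 6.0]]
uniform = [[1.0, 1.0], [1.0, 1.0]]  # uniform salience reduces to average pooling
box = [[0.0, 0.0], [0.0, 1.0]]      # all salience inside one "box" position

average = saliency_weighted_pool(features, uniform)
cropped = saliency_weighted_pool(features, box)
```

When the salience is concentrated in a rectangle, only the features inside that rectangle contribute, which mirrors the bounding-box case in (1).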

Reference

[1] Fu, Jianlong, Heliang Zheng, and Tao Mei. “Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition.” CVPR, 2017.

[2] Zheng, Heliang, et al. “Learning multi-attention convolutional neural network for fine-grained image recognition.” ICCV, 2017.

[3] Recasens, Adria, et al. “Learning to zoom: a saliency-based sampling layer for neural networks.” ECCV, 2018.

[4] Zheng, Heliang, et al. “Looking for the Devil in the Details: Learning Trilinear Attention Sampling Network for Fine-grained Image Recognition.” arXiv preprint arXiv:1903.06150 (2019).

Zero-Shot Semantic Segmentation

Reference

[1] Rohan Doshi, Olga Russakovsky, “zero-shot semantic segmentation”, bachelor thesis.

[2] Y. Xian, S. Choudhury, Y. He, B. Schiele and Z. Akata , “SPNet: Semantic Projection Network for Zero- and Few-Label Semantic Segmentation”, CVPR, 2019.

[3] Maxime Bucher, Tuan-Hung Vu, Matthieu Cord, Patrick Pérez, “Zero-Shot Semantic Segmentation”, 2019.

[4] Kato, Naoki, Toshihiko Yamasaki, and Kiyoharu Aizawa. “Zero-Shot Semantic Segmentation via Variational Mapping.” ICCV Workshops. 2019.

Zero-Shot Object Detection

ZSL based on bounding box features

End-to-end zero-shot object detection

Feature generation

Reference

[1] Ankan Bansal, Karan Sikka, Gaurav Sharma, Rama Chellappa, Ajay Divakaran, “Zero-Shot Object Detection”, ECCV, 2018.

[2] Pengkai Zhu, Hanxiao Wang, and Venkatesh Saligrama, “Zero Shot Detection”, T-CSVT, 2019.

[3] Rahman, Shafin, Salman Khan, and Fatih Porikli. “Zero-shot object detection: Learning to simultaneously recognize and localize novel concepts.” arXiv preprint arXiv:1803.06049 (2018).

[4] Rahman, Shafin, Salman Khan, and Nick Barnes. “Polarity Loss for Zero-shot Object Detection.” arXiv preprint arXiv:1811.08982 (2018).

[5] Demirel, Berkan, Ramazan Gokberk Cinbis, and Nazli Ikizler-Cinbis. “Zero-Shot Object Detection by Hybrid Region Embedding.” arXiv preprint arXiv:1805.06157 (2018).

[6] Hayat, Nasir, et al. “Synthesizing the unseen for zero-shot object detection.” Proceedings of the Asian Conference on Computer Vision. 2020.

[7] Yan, Caixia, et al. “Semantics-preserving graph propagation for zero-shot object detection.” IEEE Transactions on Image Processing 29 (2020): 8163-8176.

Zero-Shot Learning

Zero-shot learning focuses on the relation between visual features X, semantic embeddings A, and category labels Y. Based on the approach, existing zero-shot learning works can be roughly categorized into the following groups:

1) semantic relatedness: X->Y (semantic similarity; write classifier)

2) semantic embedding: X->A->Y (map from X to A; map from A to X; map between A and X into common space)
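The X->A->Y route in 2) can be sketched as a projection from visual features into the semantic space followed by a nearest-class lookup. The linear projection `W`, the cosine similarity, the two attributes, and the class names are all assumptions chosen for illustration; real methods learn the mapping from seen-class data.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def project(x, W):
    """Linear map from visual space X to semantic space A: a = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def predict(x, W, class_embeddings):
    """X -> A -> Y: project x, then return the class with the most similar embedding."""
    a = project(x, W)
    return max(class_embeddings,
               key=lambda label: cosine_similarity(a, class_embeddings[label]))


# Hypothetical 2-attribute semantic space ("striped", "has wings") and toy projection.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 1.0]]
class_embeddings = {"zebra": [1.0, 0.0], "eagle": [0.0, 1.0]}
label = predict([0.9, 0.1, 0.0], W, class_embeddings)
```

Because the class embeddings come from side information, an unseen class can be added by inserting its embedding into `class_embeddings` without any new training images.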

Based on the setting, existing zero-shot learning works can be roughly categorized into the following groups:

1) inductive ZSL (do not use unlabeled test images in the training stage) vs. semi-supervised/transductive ZSL (use unlabeled test images in the training stage)

2) standard ZSL (test images only from unseen categories) vs. generalized ZSL (test images from both seen and unseen categories) (novelty detection, calibrated stacking)
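Calibrated stacking, as it is usually described, subtracts a constant gamma from the scores of seen classes before taking the argmax, so that unseen classes are not systematically outscored. The sketch below assumes the classifier scores are given as a dictionary; the class names, scores, and value of `gamma` are made up for illustration.

```python
def calibrated_stacking(scores, seen_classes, gamma):
    """Return the class maximizing score - gamma * [class is seen]."""
    def calibrated(label):
        penalty = gamma if label in seen_classes else 0.0
        return scores[label] - penalty
    return max(scores, key=calibrated)


# Hypothetical scores for one test image of an unseen class ("zebra").
scores = {"horse": 0.55, "tiger": 0.20, "zebra": 0.45}
seen = {"horse", "tiger"}

uncalibrated = calibrated_stacking(scores, seen, gamma=0.0)
calibrated = calibrated_stacking(scores, seen, gamma=0.2)
```

With gamma = 0 the seen class "horse" wins; with gamma = 0.2 the prediction flips to the unseen class "zebra". In practice gamma trades seen-class accuracy against unseen-class accuracy and is tuned on held-out data.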

Ideas:

Mapping: dictionary learning, metric learning, etc

Embedding: multiple embedding [1], free embedding [1], self-defined embedding [1]

Application: video->object(attribute)->action [1], image->object(attribute)->scene

Combination: with active learning [1] [2], online learning [1]

Generate synthetic exemplars for unseen categories: synthetic images [SP-AEN] or synthetic features [SE-ZSL] [GAZSL] [f-xGAN]

Critical Issues:

generalized ZSL, why first predict seen or unseen?: As claimed in [1], only labeled data from seen classes are available during training, so the scoring functions of seen classes tend to dominate those of unseen classes. This biases GZSL predictions: a new data point is aggressively classified into the seen label space S, because the classifiers for the seen classes are never trained on negative examples from the unseen classes.

hubness problem [1][2]: As claimed in [2], one practical effect of the ZSL domain shift is the Hubness problem. Specifically, after the domain shift, there are a small set of hub test-class prototypes that become nearest or K nearest neighbours to the majority of testing samples in the semantic space, while others are NNs of no testing instances. This results in poor accuracy and highly biased predictions with the majority of testing examples being assigned to a small minority of classes.
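One simple way to observe hubness is to count, for each class prototype, how many test samples have it among their k nearest prototypes. A heavily skewed count means a few hubs absorb most predictions. The function name, the squared Euclidean distance, and the toy 2D data below are assumptions for illustration.

```python
def k_occurrence(test_points, prototypes, k):
    """Count how often each prototype is among the k nearest prototypes of a test point."""
    counts = [0] * len(prototypes)
    for point in test_points:
        # Squared Euclidean distance from this test point to every prototype.
        distances = [
            (sum((p - q) ** 2 for p, q in zip(point, proto)), index)
            for index, proto in enumerate(prototypes)
        ]
        for _, index in sorted(distances)[:k]:
            counts[index] += 1
    return counts


# Toy semantic space: prototype 0 sits near the centre of the test data and acts as a hub.
prototypes = [[0.0, 0.0], [5.0, 5.0], [-5.0, 5.0]]
test_points = [[1.0, 1.0], [-1.0, 1.0], [0.5, -0.5], [2.0, 2.0]]
counts = k_occurrence(test_points, prototypes, k=1)
```

Here prototype 0 is the nearest neighbour of every test point while the other two prototypes are the nearest neighbour of none, which is the pattern described above.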

projection domain shift: what is the impact on the decision values?

Datasets:

Survey and Resource:

Other applications:

- zero-shot object detection

- zero-shot figure-ground segmentation [1]

- zero-shot semantic segmentation

- zero-shot retrieval

- zero-shot domain adaptation

Word Vector

Survey

For a brief survey summarizing skip-gram, CBOW, GloVe, etc, please refer to this.

Code

word2vec: TensorFlow

GloVe: C, TensorFlow

WikiCorpus

Download the WikiCorpus and use the shell script to process it (e.g., remove numbers, invalid characters, and URLs), yielding a sequence of plain words.
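The shell script itself is not reproduced here. As a stand-in, the sketch below shows the same kind of cleaning in Python for English text: lowercase each line, drop URLs, then drop numbers and any other non-letter characters. The regular expressions and the function name are assumptions, and non-ASCII letters are discarded by this particular pattern.

```python
import re

URL_PATTERN = re.compile(r"(?:https?://|www\.)\S+")
NON_LETTER_PATTERN = re.compile(r"[^a-z\s]+")


def clean_line(line):
    """Lowercase a line, strip URLs, numbers, and non-letter characters, and split into words."""
    line = URL_PATTERN.sub(" ", line.lower())
    line = NON_LETTER_PATTERN.sub(" ", line)
    return line.split()


words = clean_line("Paris has 2,148,000 residents (see https://example.org/paris) & more!")
```

The resulting word lists can be written out one sentence per line, which is the input format most word2vec and GloVe training scripts expect.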

Resources

- English word vectors: https://github.com/3Top/word2vec-api

- Non-English word vectors: https://github.com/Kyubyong/wordvectors